Suggestive Drawing Along Human and Artificial Intelligences

by Nono Martínez Alonso (MDes ’17)

We use sketching as a medium to represent the world. Design software has enabled drawing features that are not available in physical media. Layers, copy-paste, and the undo function, for instance, make otherwise tedious and repetitive drawing tasks trivial. Digital devices translate our actions into accurate drawings, but they cannot participate in how we represent the world because they have no way of perceiving it.

In recent years, the field of artificial intelligence has experienced a boom, driven mainly by faster, cheaper, and more powerful parallel processing and by a rapidly growing flood of data of every kind. By processing thousands of pictures, a computer can learn certain aspects of the world and make decisions in a way that resembles human thinking. This presents an opportunity to teach machines how to draw by having them observe images and sketches of real objects, so that they can participate in the drawing process rather than act as mere transcribers.

I propose an environment for suggestive drawing, in which a person draws while machines suggest edits to transform, continue, analyze, or even rationalize the drawing. Responsibility is delegated spatially and temporally among human and computational intelligences: characterized, intelligent, learning machines with non-deterministic and semi-autonomous behaviors provide meaningful drawing suggestions. This takes the idea of the digital tool one step further. It attempts to escape the point-and-click paradigm of deterministic machines in favor of fuzzier commands, and it augments a familiar medium, pencil and paper, with an interface to artificial intelligences.

I believe this approach will enable a new branch of creative mediation with machines that can develop their own aesthetics and gather knowledge from previously seen images and representations of the world.

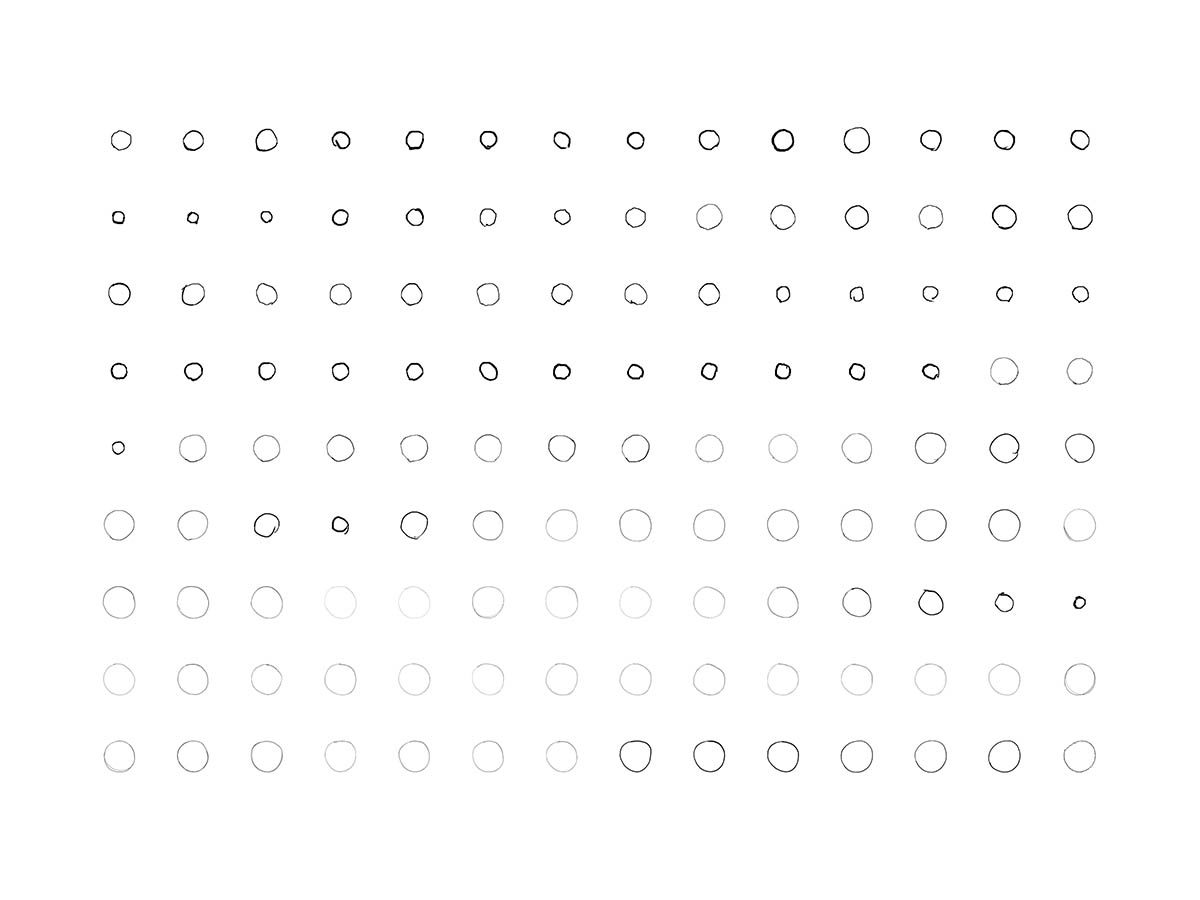

Sketcher bot uses pix2pix, an image-to-image translation model, trained on hand sketches of trees and urban scenes. The tree training set consists of sixty-four hand-drawn trees by Lourdes Alonso Carrión, an artist who collaborated on the project. The urban scene training set consists of urban sketches by multiple sketchers, including myself. As you can see in the video, sketching only the outline of a tree is enough to obtain a detailed, hand-drawn tree. In the case of the urban scenes, the bot tries to watercolor them.
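The article names pix2pix but does not describe how Sketcher bot was trained. As a hedged illustration of the technique, here is the generator objective from the original pix2pix paper (Isola et al., 2017): a conditional-GAN adversarial term that rewards fooling the discriminator, plus an L1 term, weighted by lambda (100 in the paper), that keeps the output close to the paired target drawing. The function names, the flattened-list image representation, and the toy pixel values are hypothetical; a real implementation would operate on image tensors in a deep-learning framework.

```python
import math
from typing import Sequence


def l1_distance(generated: Sequence[float], target: Sequence[float]) -> float:
    """Mean absolute per-pixel difference between two flattened images."""
    if len(generated) != len(target):
        raise ValueError("images must have the same number of pixels")
    return sum(abs(g - t) for g, t in zip(generated, target)) / len(target)


def pix2pix_generator_loss(
    d_fake: float,
    generated: Sequence[float],
    target: Sequence[float],
    lam: float = 100.0,
    eps: float = 1e-12,
) -> float:
    """Non-saturating adversarial term plus a lambda-weighted L1 term.

    d_fake is the discriminator's probability that the
    (outline, generated drawing) pair is real. It is clipped at eps
    so that log(0) is never evaluated.
    """
    adversarial = -math.log(max(d_fake, eps))
    return adversarial + lam * l1_distance(generated, target)


# Hypothetical 2x2 "drawings": pixel values in [0, 1], flattened row by row.
target_tree = [0.0, 1.0, 1.0, 0.0]
generated_tree = [0.1, 0.9, 1.0, 0.0]
print(pix2pix_generator_loss(0.5, generated_tree, target_tree))
```

For a tree outline given as input, a small L1 term means the generated strokes closely match the artist's drawing for that outline, while the adversarial term pushes the output toward strokes the discriminator finds plausible as hand-drawn.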